The study found a segment of respondents who expressed positive views about everything, even when that meant giving seemingly contradictory answers. Such answers suggest untrustworthy data that could bias poll estimates. If a nontrivial share of respondents seek out positive answer choices and always select them (e.g., on the assumption that it is a market research survey and/or that doing so would please the researcher), that could systematically bias approval ratings upward. The study included seven questions in which respondents could answer that they “approve” or “favor” something. Specifically, the survey asked:

- Do you approve or disapprove of the job Donald Trump is doing as President?

- What is your overall opinion of U.S. President Donald Trump?<sup>14</sup>

- What is your overall opinion of British Prime Minister Theresa May?

- What is your overall opinion of Russian President Vladimir Putin?

- What is your overall opinion of German Chancellor Angela Merkel?

- What is your overall opinion of French President Emmanuel Macron?

- Do you approve or disapprove of the health care law passed by Barack Obama and Congress in 2010?

If respondents are answering carefully, it would be unusual for them to hold genuinely favorable views of Emmanuel Macron, Angela Merkel, the Affordable Care Act (ACA), Theresa May, Donald Trump and Vladimir Putin all at once. The first half of the list tends to draw support from left-leaning audiences, while the latter half is more popular with conservative audiences.

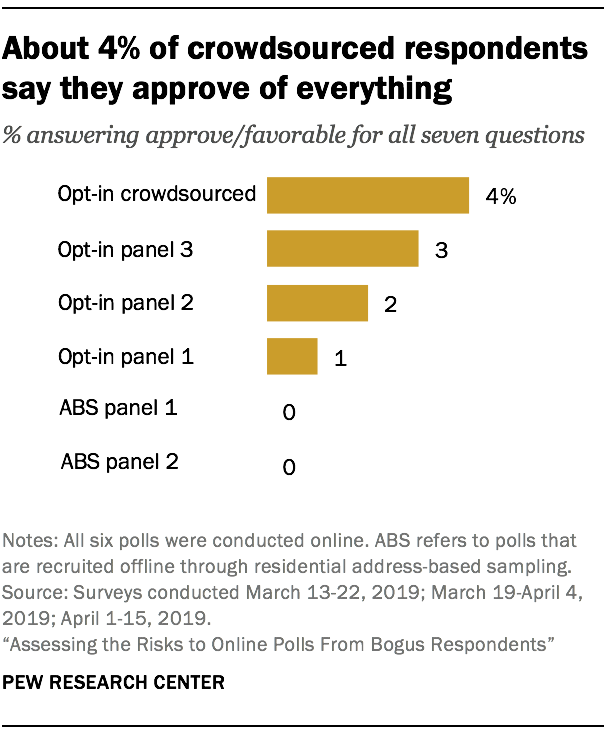

The study found that 2% of respondents gave an approve or favorable response to all seven of these questions. The rate was highest in the crowdsourced poll (4%), followed by the three opt-in panels (ranging from 1% to 3%). The address-recruited polls contained a few such respondents, but as a share of the total their incidence rounds to 0%. Researchers confirmed that this behavior was purposeful, not simply a primacy effect (a tendency to pick whichever option is listed first), in a follow-up experiment in which the order of responses was randomized (see Chapter 8).
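The always-approve flag described above can be computed in a few lines. The sketch below is a minimal illustration, not the study's actual code: the item names in `ITEMS`, the `source` field, and the assumption that responses have already been collapsed to “approve”/“favorable” versus other answers are all hypothetical, and the shares it returns are unweighted.

```python
from collections import defaultdict

# Hypothetical variable names for the seven items; the study's real names are not given.
ITEMS = ["trump_job", "trump_fav", "may_fav", "putin_fav",
         "merkel_fav", "macron_fav", "aca"]

# Assumption: answers are pre-collapsed so the positive option is "approve" or "favorable".
POSITIVE = {"approve", "favorable"}


def always_positive(resp: dict) -> bool:
    """True if the respondent chose approve/favorable on all seven items.
    A skipped or missing item counts as not positive."""
    return all(resp.get(item) in POSITIVE for item in ITEMS)


def share_by_source(respondents: list[dict]) -> dict[str, float]:
    """Unweighted share of always-positive respondents within each sample source."""
    totals: dict[str, int] = defaultdict(int)
    flagged: dict[str, int] = defaultdict(int)
    for r in respondents:
        totals[r["source"]] += 1
        flagged[r["source"]] += always_positive(r)  # bool adds as 0 or 1
    return {src: flagged[src] / totals[src] for src in totals}


if __name__ == "__main__":
    toy = [
        {"source": "A", **{i: "approve" for i in ITEMS}},
        {"source": "A", **{i: "disapprove" for i in ITEMS}},
        {"source": "B", **{i: "favorable" for i in ITEMS}},
    ]
    print(share_by_source(toy))  # {'A': 0.5, 'B': 1.0}
```

A real analysis would apply survey weights and keep the distinct response scales of the “approve” and “favorable” items rather than pooling them into one set.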

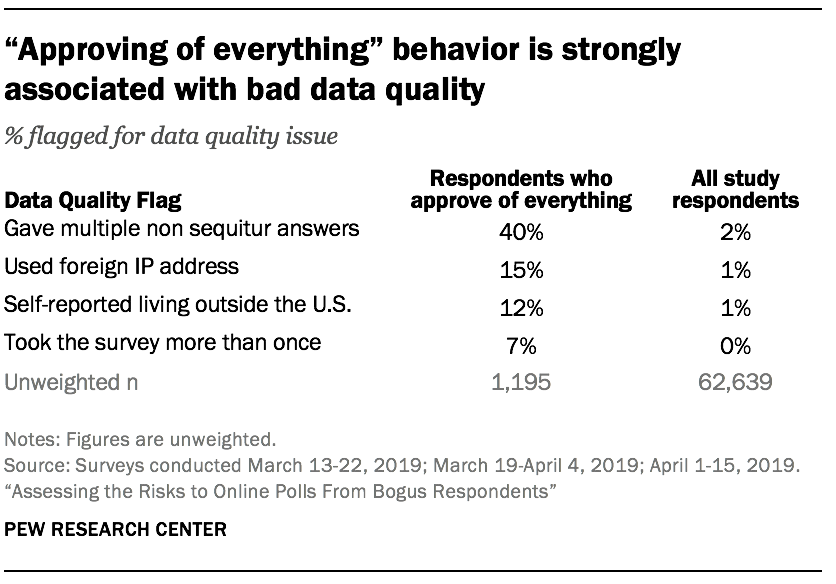

While approving of everything might seem benign, it was strongly associated with bad data quality. About one-in-seven (15%) respondents who approved of everything had an IP address from outside the U.S. About 7% of always-approving respondents took the survey multiple times, and a sizable share (40%) gave multiple non-sequitur answers to the open-ended question. Each of these rates is significantly higher than the corresponding rate among all study respondents.
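The comparison in the paragraph above amounts to computing each data-quality indicator's rate among always-approvers and among all respondents. The sketch below assumes hypothetical boolean fields (`always_approve`, `non_us_ip`, `took_survey_multiple_times`, `multiple_non_sequiturs`) and computes unweighted rates only; it does not reproduce the study's significance testing.

```python
# Hypothetical field names for the data-quality indicators described in the text.
INDICATORS = ["non_us_ip", "took_survey_multiple_times", "multiple_non_sequiturs"]


def rate(respondents: list[dict], field: str) -> float:
    """Unweighted share of respondents whose boolean `field` is True."""
    if not respondents:
        raise ValueError("no respondents to compute a rate over")
    return sum(bool(r.get(field)) for r in respondents) / len(respondents)


def compare_indicators(respondents: list[dict]) -> dict[str, tuple[float, float]]:
    """For each indicator, return (rate among always-approvers, rate among all)."""
    flagged = [r for r in respondents if r.get("always_approve")]
    return {f: (rate(flagged, f), rate(respondents, f)) for f in INDICATORS}
```

Because always-approvers are a subset of all respondents, a formal test would more naturally compare them with the non-flagged group than with the full sample.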

This always-approve behavior is related to giving unsolicited, positive product-style evaluations in the open-ended questions. Among the 413 respondents whose open-ended answer sounded like a positive product evaluation, half (50%) answered “approve” or “favorable” on all seven closed-ended questions.<sup>15</sup>

While some of these respondents may have been answering honestly, a more plausible explanation is that this pattern represents error. Critically, this error is not mere random “noise” that cancels out across respondents. Because it always pushes answers toward “approve” or “favorable,” it systematically shifts the poll results upward.
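A simplified model shows why this error biases estimates in one direction rather than averaging out. Assume, as a hypothetical illustration, that a share $s$ of respondents always approve, and that their genuine views (if they answered carefully) would match the approval rate $p$ of everyone else. The observed approval rate $\hat{p}$ and its bias are then:

$$
\hat{p} = (1 - s)\,p + s \cdot 1 = p + s\,(1 - p),
\qquad
\hat{p} - p = s\,(1 - p) \ge 0 .
$$

The bias is never negative, and it is largest for items with low genuine approval. With $s = 0.02$ (the overall rate reported above) and a hypothetical $p = 0.40$, the bias is $0.02 \times 0.60 = 0.012$, or 1.2 percentage points; for a hypothetical $p = 0.10$ it rises to $0.02 \times 0.90 = 0.018$, or 1.8 points.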