Many decisions that could be made by human beings – from interpreting medical images to recommending books or movies – can now be made by computer algorithms with advanced analytic capabilities and access to huge stores of data. The growing prevalence of these algorithms has led to widespread concerns about their impact on the people affected by the decisions they make. To proponents, these systems promise to increase accuracy and reduce human bias in important decisions. But others worry that many of these systems amount to “weapons of math destruction” that simply reinforce existing biases and disparities under the guise of algorithmic neutrality.

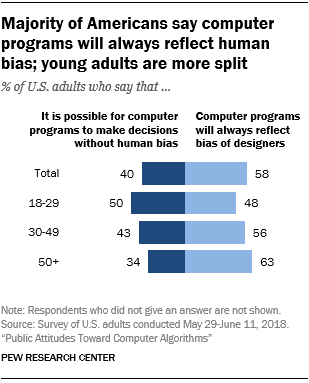

This survey finds that the public is more broadly inclined to share the latter, more skeptical view. Roughly six-in-ten Americans (58%) feel that computer programs will always reflect the biases of the people who designed them, while 40% feel it is possible for computer programs to make decisions that are free from human bias. Notably, younger Americans are more supportive of the notion that computer programs can be developed that are free from bias. Half of 18- to 29-year-olds and 43% of those ages 30 to 49 hold this view, but that share falls to 34% among those ages 50 and older.

This general concern about computer programs making important decisions is also reflected in public attitudes about the use of algorithms and big data in several real-life contexts.

To gain a deeper understanding of the public’s views of algorithms, the survey asked respondents about their opinions of four examples in which computers use various personal and public data to make decisions with real-world impact for humans. They include examples of decisions being made by both public and private entities. They also include a mix of personal situations with direct relevance to a large share of Americans (such as being evaluated for a job) and those that might be more distant from many people’s lived experiences (like being evaluated for parole). And all four are based on real-life examples of technologies that are currently in use in various fields.

The specific scenarios in the survey include the following:

- An automated personal finance score that collects and analyzes data from many different sources about people’s behaviors and personal characteristics (not just their financial behaviors) to help businesses decide whether to offer them loans, special offers or other services.

- A criminal risk assessment that collects data about people who are up for parole, compares that data with that of others who have been convicted of crimes, and assigns a score that helps decide whether they should be released from prison.

- A program that analyzes videos of job interviews, compares interviewees’ characteristics, behavior and answers to those of successful employees, and gives them a score that can help businesses decide whether job candidates would be a good hire or not.

- A computerized resume screening program that evaluates the contents of submitted resumes and forwards only those meeting a certain threshold score to a hiring manager, who reviews them for the next stage of the hiring process.

For each scenario, respondents were asked to indicate whether they think the program would be fair to the people being evaluated; if it would be effective at doing the job it is designed to do; and whether they think it is generally acceptable for companies or other entities to use these tools for the purposes outlined.

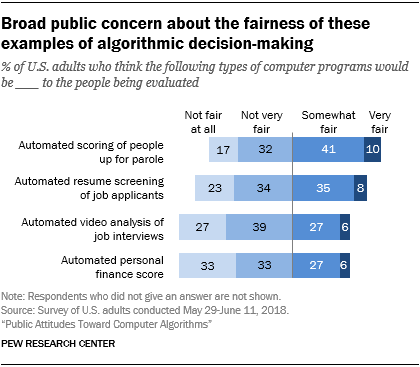

Sizable shares of Americans view each of these scenarios as unfair to those being evaluated

Americans are largely skeptical about the fairness of these programs: None is viewed as fair by a clear majority of the public. Especially small shares think the “personal finance score” and “video job interview analysis” concepts would be fair to consumers or job applicants (32% and 33%, respectively). The automated criminal risk score concept is viewed as fair by the largest share of Americans. Even so, only around half the public finds this concept fair – and just one-in-ten think this type of program would be very fair to people in parole hearings.

Demographic differences are relatively modest on the question of whether these systems are fair, although there is some notable attitudinal variation related to race and ethnicity. Blacks and Hispanics are more likely than whites to find the consumer finance score concept fair to consumers. Just 25% of whites think this type of program would be fair to consumers, but that share rises to 45% among blacks and 47% among Hispanics. By contrast, blacks express much more concern about a parole scoring algorithm than do either whites or Hispanics. Roughly six-in-ten blacks (61%) think this type of program would not be fair to people up for parole, significantly higher than the share of either whites (49%) or Hispanics (38%) who say the same.

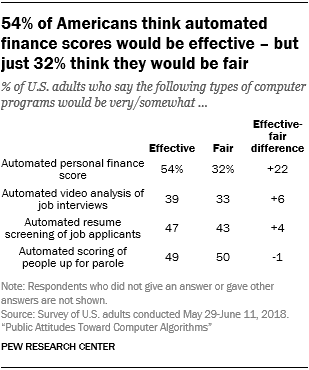

The public is mostly divided on whether these programs would be effective or not

The public is relatively split on whether these programs would be effective at doing the job they are designed to do. Some 54% think the personal finance score program would be effective at identifying good customers, while around half think the parole rating (49%) and resume screening (47%) algorithms would be effective. Meanwhile, 39% think the video job interview concept would be a good way to identify successful hires.

For the most part, people’s views of the fairness and effectiveness of these programs go hand in hand. For most of these concepts, the share of the public that views the concept as fair to those being judged is similar to the share that says it would be effective at producing good decisions. But the personal finance score concept is a notable exception to this overall trend. Some 54% of Americans think this type of program would do a good job at helping businesses find new customers, but just 32% think it is fair for consumers to be judged in this way. That 22-percentage-point difference is by far the largest among the four different scenarios.

Majorities of Americans think the use of these programs is unacceptable; concerns about data privacy, fairness and overall effectiveness top their list of worries

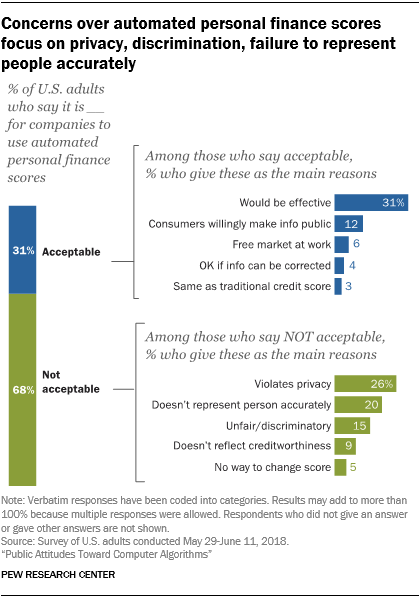

Majorities of the public think it is not acceptable for companies or other entities to use the concepts described in this survey. Most prominently, 68% of Americans think using the personal finance score concept is unacceptable, and 67% think it is unacceptable for companies to conduct computer-aided video analysis of interviews when hiring job candidates.

The survey asked respondents to describe in their own words why they feel these programs are acceptable or not, and certain themes emerged in these responses. Those who think these programs are acceptable often focus on the fact that they would be effective at doing the job they purport to do. Additionally, some argue in the case of private sector examples that the concepts simply represent the company’s prerogative or the free market at work.

Meanwhile, those who find the use of these programs to be unacceptable often worry that they will not do as good a job as advertised. They also express concerns about the fairness of these programs and in some cases worry about the privacy implications of the data being collected and shared. The public reaction to each of these concepts is discussed in more detail below.

Automated personal finance score

Among the 31% of Americans who think it would be acceptable for companies to use this type of program, the largest share of respondents (31%) feel it would be effective at helping companies find good customers. Smaller shares say customers have no right to complain about this practice since they are willingly putting their data out in public with their online activities (12%), or that companies can do what they want and/or that this is simply the free market at work (6%).

Here are some samples of these responses:

- “I believe that companies should be able to use an updated, modern effort to judge someone’s fiscal responsibility in ways other than if they pay their bills on time.” Man, 28

- “Finances and financial situations are so complex now. A person might have a bad credit score due to a rough patch but doesn’t spend frivolously, pays bills, etc. This person might benefit from an overall look at their trends. Alternately, a person who sides on trends in the opposite direction but has limited credit/good credit might not be a great choice for a company as their trends may indicate that they will default later.” Woman, 32

- “[It’s] simple economics – if people want to put their info out there….well, sucks to be them.” Man, 29

- “It sounds exactly like a credit card score, which, while not very fair, is considered acceptable.” Woman, 25

- “Because it’s efficient and effective at bringing businesses information that they can use to connect their services and products (loans) to customers. This is a good thing. To streamline the process and make it cheaper and more targeted means less waste of resources in advertising such things.” Man, 33

The 68% of Americans who think it is unacceptable for companies to use this type of program cite three primary concerns. Around one-quarter (26%) argue that collecting this data violates people’s privacy. One-in-five say that someone’s online data does not accurately represent them as a person, while 9% make the related point that people’s online habits and behaviors have nothing to do with their overall creditworthiness. And 15% feel that it is potentially unfair or discriminatory to rely on this type of score.

Here are some samples of these responses:

- “Opaque algorithms can introduce biases even without intending to. This is more of an issue with criminal sentencing algorithms, but it can still lead to redlining and biases against minority communities. If they were improved to eliminate this I’d be more inclined to accept their use.” Man, 46

- “It encroaches on someone’s ability to freely engage in activities online. It makes one want to hide what they are buying – whether it is a present for a friend or a book to read. Why should anyone have that kind of access to know my buying habits and take advantage of it in some way? That kind of monitoring just seems very archaic. I can understand why this would be done, from their point of view it helps to show what I as a customer would be interested in buying. But I feel that there should be a line of some kind, and this crosses that line.” Woman, 27

- “I don’t think it is fair for companies to use my info without my permission, even if it would be a special offer that would interest me. It is like spying, not acceptable. It would also exclude people from receiving special offers that can’t or don’t use social media, including those from lower socioeconomic levels.” Woman, 63

- “Algorithms are biased programs adhering to the views and beliefs of whomever is ordering and controlling the algorithm … Someone has made a decision about the relevance of certain data and once embedded in a reviewing program becomes irrefutable gospel, whether it is a good indicator or not.” Man, 80

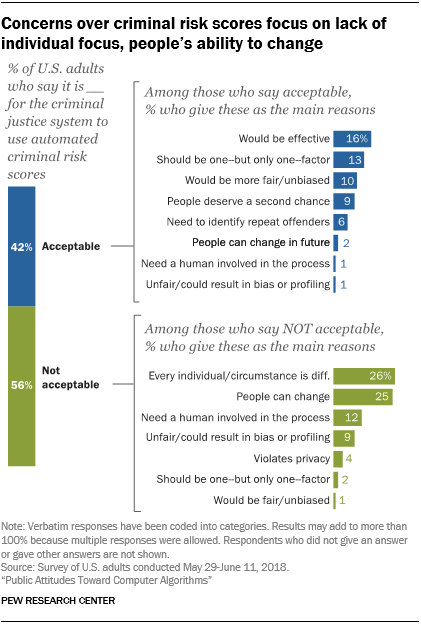

Automated criminal risk score

The 42% of Americans who think the use of this type of program is acceptable mention a range of reasons for feeling this way, with no single factor standing out from the others. Some 16% of these respondents think this type of program is acceptable because it would be effective or because it’s helpful for the justice system to have more information when making these decisions. A similar share (13%) thinks this type of program would be acceptable if it is just one part of the decision-making process, while one-in-ten think it would be fairer and less biased than the current system.

In some cases, respondents use very different arguments to support the same outcome. For instance, 9% of these respondents think this type of program is acceptable because it offers prisoners a second chance at being a productive member of society. But 6% support it because they think it would help protect the public by keeping in jail potentially dangerous individuals who might otherwise go free.

Some examples:

- “Prison and law enforcement officials have been doing this for hundreds of years already. It is common sense. Now that it has been identified and called a program or a process [that] does not change anything.” Man, 71

- “Because the other option is to rely entirely upon human decisions, which are themselves flawed and biased. Both human intelligence and data should be used.” Man, 56

- “Right now, I think many of these decisions are made subjectively. If we can quantify risk by objective criteria that have shown validity in the real world, we should use it. Many black men are in prison, it is probable that with more objective criteria they would be eligible for parole. Similarly, other racial/ethnic groups may be getting an undeserved break because of subjective bias. We need to be as fair as possible to all individuals, and this may help.” Man, 81

- “While such a program would have its flaws, the current alternative of letting people decide is far more flawed.” Man, 42

- “As long as they have OTHER useful info to make their decisions then it would be acceptable. They need to use whatever they have available that is truthful and informative to make such an important decision!” Woman, 63

The 56% of Americans who think this type of program is not acceptable tend to focus on the efficacy of judging people in this manner. Some 26% of these respondents argue that every individual or circumstance is different and that a computer program would have a hard time capturing these nuances. A similar share (25%) argues that this type of system precludes the possibility of personal growth or worries that the program might not have the best information about someone when making its assessment. And around one-in-ten worry about the lack of human involvement in the process (12%) or express concern that this system might result in unfair bias or profiling (9%).

Some examples:

- “People should be looked at and judged as an individual, not based on some compilation of many others. We are all very different from one another even if we have the same interests or ideas or beliefs – we are an individual within the whole.” Woman, 71

- “Two reasons: People can change, and data analysis can be wrong.” Woman, 63

- “Because it seems like you’re determining a person’s future based on another person’s choices.” Woman, 46

- “Information about populations are not transferable to individuals. Take BMI [body mass index] for instance. This measure was designed to predict heart disease in large populations but has been incorrectly applied for individuals. So, a 6-foot-tall bodybuilder who weighs 240 lbs is classified as morbidly obese because the measure is inaccurate. Therefore, information about recidivism of populations cannot be used to judge individual offenders.” Woman, 54

- “Data collection is often flawed and difficult to correct. Algorithms do not reflect the soul. As a data scientist, I also know how often these are just wrong.” Man, 36

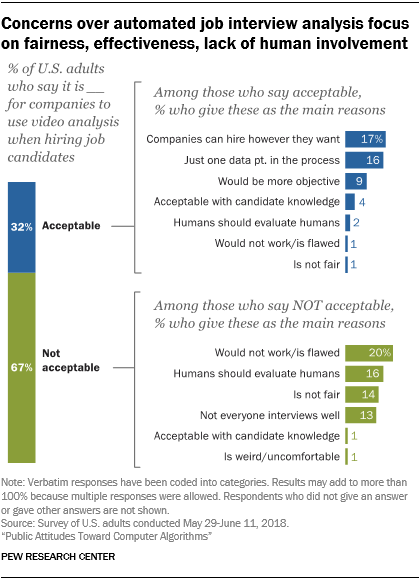

Video analysis of job candidates

Two themes stand out in the responses of the 32% of Americans who think it is acceptable to use this tool when hiring job candidates. Some 17% of these respondents think companies should have the right to hire however they see fit, while 16% think it is acceptable because it’s just one data point among many in the interview process. Another 9% think this type of analysis would be more objective than a traditional person-to-person interview.

Some examples:

- “All’s fair in commonly accepted business practices.” Man, 76

- “They are analyzing your traits. I don’t have a problem with that.” Woman, 38

- “Again, in this fast-paced world, with our mobile society and labor market, a semi-scientific tool bag is essential to stay competitive.” Man, 71

- “As long as the job candidate agrees to this format, I think it’s acceptable. Hiring someone entails a huge financial investment and this might be a useful tool.” Woman, 61

- “I think it’s acceptable to use the product during the interview. However, to use it as the deciding factor is ludicrous. Interviews are tough and make candidates nervous, therefore I think that using this is acceptable but poor if used for final selection.” Man, 23

Respondents who think this type of process is unacceptable tend to focus on whether it would work as intended. One-in-five argue that this type of analysis simply won’t work or is flawed in some general way. A slightly smaller share (16%) makes the case that humans should interview other humans, while 14% feel that this process is just not fair to the people being evaluated. And 13% feel that not everyone interviews well and that this scoring system might overlook otherwise talented candidates.

Some examples:

- “I don’t think that characteristics obtained in this manner would be reliable. Great employees can come in all packages.” Woman, 68

- “Individuals may have attributes and strengths that are not evident through this kind of analysis and they would be screened out based on the algorithm.” Woman, 57

- “A person could be great in person but freeze during such an interview (on camera). Hire a person not a robot if you are wanting a person doing a job. Interviews as described should only be used for persons that live far away and can’t come in and then only to narrow down the candidates for the job, then the last interview should require them to have a person to person interview.” Woman, 61

- “Some people do not interview well, and a computer cannot evaluate a person’s personality and how they relate to other people.” Man, 75

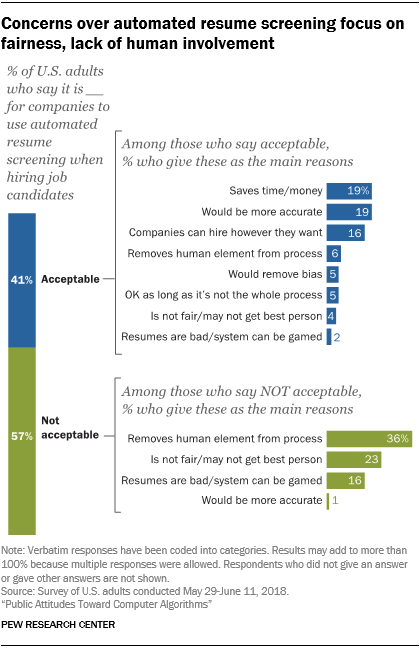

Automated resume screening

The 41% of Americans who think it is acceptable for companies to use this type of program give three major reasons for feeling this way. Around one-in-five (19%) find it acceptable because the company using the process would save a great deal of time and money. An identical share thinks it would be more accurate than screening resumes by hand, and 16% feel that companies can hire however they want to hire.

Some examples:

- “Have you ever tried to sort through hundreds of applications? A program provides a non-partial means of evaluating applicants. It may not be perfect, but it is efficient.” Woman, 65

- “While I wouldn’t do this for my company, I simply think it’s acceptable because private companies should be able to use whatever methods they want as long as they don’t illegally discriminate. I happen to think some potentially good candidates would be passed over using this method, but I wouldn’t say an organization shouldn’t be allowed to do it this way.” Man, 50

- “If it eliminates resumes that don’t meet criteria, it allows the hiring process to be more efficient.” Woman, 43

Those who find the process unacceptable similarly focus on three major themes. Around one-third (36%) worry that this type of process takes the human element out of hiring. Roughly one-quarter (23%) feel that this system is not fair or would not always get the best person for the job. And 16% worry that resumes are simply not a good way to choose job candidates and that people could game the system by putting in keywords that appeal to the algorithm.

Here are some samples of these responses:

- “Again, you are taking away the human component. What if a very qualified person couldn’t afford to have a professional resume writer do his/her resume? The computer would kick it out.” Woman, 72

- “Companies will get only employees who use certain words, phrases, or whatever the parameters of the search are. They will miss good candidates and homogenize their workforce.” Woman, 48

- “The likelihood that a program kicks a resume, and the human associated with it, for minor quirks in terminology grows. The best way to evaluate humans is with humans.” Man, 54

- “It’s just like taking standardized school tests, such as the SAT, ACT, etc. There are teaching programs to help students learn how to take the exams and how to ‘practice’ with various examples. Therefore, the results are not really comparing the potential of all test takers, but rather gives a positive bias to those who spend the time and money learning how to take the test.” Man, 64