The American Trends Panel (ATP), created by Pew Research Center, is a nationally representative panel of randomly selected U.S. adults recruited from landline and cellphone random-digit-dial (RDD) surveys. Panelists participate via monthly self-administered web surveys. Panelists who do not have internet access are provided with a tablet and wireless internet connection. The panel is managed by Abt SRBI.

Data in this report are drawn from the panel wave conducted Jan. 9-23, 2017, among 4,248 respondents. The margin of sampling error for the full sample of respondents is plus or minus 2.9 percentage points.
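As a rough check on the 2.9-point figure, the sketch below applies the standard margin-of-error formula for a proportion, inflated by a design effect to reflect weighting. It assumes p = 0.5 (the most conservative case) and z = 1.96 for 95% confidence. The design effect is not reported in this section, so `implied_deff` backs one out from the published margin, and `kish_deff` shows Kish's common approximation for the design effect of unequal weights. All function names are illustrative and not part of any Pew tool.

```python
import math


def margin_of_error(n, deff=1.0, p=0.5, z=1.96):
    """Half-width of a confidence interval for a proportion, in percentage points.

    deff inflates the simple-random-sampling variance to account for weighting.
    """
    return 100 * z * math.sqrt(deff * p * (1 - p) / n)


def kish_deff(weights):
    """Kish's approximate design effect from unequal weights: n * sum(w^2) / (sum w)^2."""
    n = len(weights)
    return n * sum(w * w for w in weights) / sum(weights) ** 2


def implied_deff(moe_pp, n, p=0.5, z=1.96):
    """Design effect needed for an n-person sample to have the given margin (points)."""
    return (moe_pp / margin_of_error(n, 1.0, p, z)) ** 2


srs_moe = margin_of_error(4248)       # margin if the sample were unweighted
deff = implied_deff(2.9, 4248)        # design effect implied by the reported 2.9 points
print(f"{srs_moe:.2f} points without weighting; implied design effect {deff:.2f}")
```

Under these assumptions, an unweighted sample of 4,248 would have a margin of about 1.5 points, so the reported 2.9 points implies a design effect of roughly 3.7.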

Members of the ATP were recruited from two large, national landline and cellphone RDD surveys conducted in English and Spanish. At the end of each survey, respondents were invited to join the panel. The first group of panelists was recruited from the 2014 Political Polarization and Typology Survey, conducted Jan. 23 to March 16, 2014. Of the 10,013 adults interviewed, 9,809 were invited to take part in the panel and a total of 5,338 agreed to participate.[7] The second group of panelists was recruited from the 2015 Survey on Government, conducted Aug. 27 to Oct. 4, 2015. Of the 6,004 adults interviewed, all were invited to join the panel, and 2,976 agreed to participate.[8]

The ATP data were weighted in a multistep process that begins with a base weight incorporating the respondents’ original survey selection probability and the fact that in 2014 some panelists were subsampled for invitation to the panel. Next, an adjustment was made for the fact that the propensity to join the panel and remain an active panelist varied across different groups in the sample. The final step in the weighting uses an iterative technique that aligns the sample to population benchmarks on a number of dimensions. Gender, age, education, race, Hispanic origin and region parameters come from the U.S. Census Bureau’s 2015 American Community Survey. The county-level population density parameter (deciles) comes from the 2010 U.S. decennial census. The telephone service benchmark comes from the January-June 2016 National Health Interview Survey and is projected to 2017. The volunteerism benchmark comes from the 2015 Current Population Survey Volunteer Supplement. The party affiliation benchmark is the average of the three most recent Pew Research Center general public telephone surveys. The internet access benchmark comes from the 2015 Pew Survey on Government. Respondents who did not previously have internet access are treated as not having internet access for weighting purposes. Sampling errors and statistical tests of significance take into account the effect of weighting. Interviews are conducted in both English and Spanish, but the Hispanic sample in the ATP is predominantly native born and English speaking.
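The final, iterative step described above is commonly implemented as raking (iterative proportional fitting): weights are repeatedly rescaled so that the weighted share of each category matches its benchmark, one dimension at a time, until all dimensions agree. The sketch below is a minimal illustration under stated assumptions, not Pew's production code. It assumes the starting weights already combine the base weight and the panel-propensity adjustment, that every benchmark category has at least one respondent, and that each dimension's target shares sum to 1. The function name `rake` and the toy dimensions are hypothetical.

```python
from collections import defaultdict


def rake(weights, respondents, targets, max_iter=100, tol=1e-8):
    """Rake weights so weighted category shares match population targets.

    weights: starting weights, one per respondent (assumed to be the base
        weight already multiplied by a panel-propensity adjustment).
    respondents: one dict per respondent mapping dimension -> category.
    targets: dimension -> {category: population share}; shares sum to 1.
    """
    w = list(weights)
    for _ in range(max_iter):
        worst = 0.0  # largest departure of any adjustment factor from 1 in this pass
        for dim, shares in targets.items():
            total = sum(w)
            current = defaultdict(float)
            for wi, r in zip(w, respondents):
                current[r[dim]] += wi
            # Target weighted total divided by current weighted total, per category
            factor = {c: shares[c] * total / current[c] for c in shares}
            w = [wi * factor[r[dim]] for wi, r in zip(w, respondents)]
            worst = max(worst, max(abs(f - 1) for f in factor.values()))
        if worst < tol:  # every dimension already matched its targets
            break
    return w


# Toy example with two hypothetical dimensions
resp = [
    {"sex": "f", "age": "young"},
    {"sex": "f", "age": "young"},
    {"sex": "f", "age": "old"},
    {"sex": "m", "age": "young"},
    {"sex": "m", "age": "old"},
]
targets = {"sex": {"f": 0.5, "m": 0.5}, "age": {"young": 0.6, "old": 0.4}}
raked = rake([1.0] * len(resp), resp, targets)
```

Each rescaling preserves the sum of the weights, so only their distribution across groups changes. The real procedure aligns many more dimensions (education, race, population density, party affiliation and so on) at once.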

This report compares some internet-related estimates from 2017 to estimates for the same questions measured in 2014 and discusses change over time. To make the estimates comparable and isolate real change in the public during this period, it was necessary to account for an administrative change in the ATP survey panel. In 2014, the panel used a mail mode for adults without home internet or without email. By 2017, the panel provided these adults with tablets and internet access, so all interviewing is now conducted online. In the 2014 survey, 106 panelists who had access to the internet but chose to take their surveys by mail were not asked questions about internet use and online experiences. These cases have been excluded from the analysis in this report, and the data have been reweighted. The weights for the 2017 survey were also adjusted to ensure comparability between the 2014 and 2017 samples with respect to the share of the population with internet access. As a result, estimates in this report differ slightly from estimates in other reports that use these data, but these estimates best measure real change in the public from 2014 to 2017.

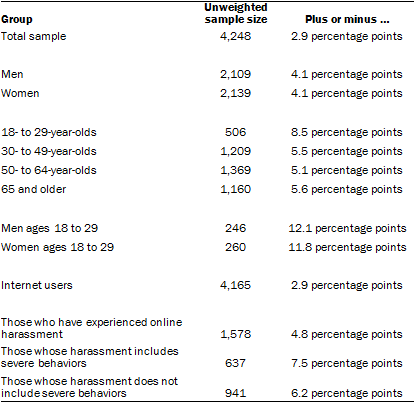

The following table shows the unweighted sample sizes and the error attributable to sampling that would be expected at the 95% level of confidence for different groups in the survey:

Sample sizes and sampling errors for other subgroups are available upon request.

In addition to sampling error, one should bear in mind that question wording and practical difficulties in conducting surveys can introduce error or bias into the findings of opinion polls.

Open-ended responses have been edited for punctuation and clarity.

The January 2017 wave had a response rate of 81% (4,248 responses among 5,268 individuals in the panel). Taking into account the combined, weighted response rate for the recruitment surveys (10.0%) and attrition from panel members who were removed at their request or for inactivity, the cumulative response rate for the wave is 2.7%.[9]
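The arithmetic behind these rates can be sketched as follows, assuming the cumulative rate is the product of stage-level rates: the recruitment-survey response rate, a combined factor for joining the panel and remaining active, and the wave response rate. That combined factor is not reported here, so the sketch backs it out from the published figures; because the inputs are rounded, the result is only approximate. The constant and function names are hypothetical.

```python
WAVE_COMPLETES = 4248           # responses in the January 2017 wave
PANEL_SIZE = 5268               # individuals in the panel at the time of the wave
RECRUITMENT_RR = 0.100          # combined, weighted recruitment-survey response rate
REPORTED_CUMULATIVE_RR = 0.027  # published cumulative response rate


def cumulative_response_rate(recruitment_rr, retention_factor, wave_rr):
    """Cumulative rate as a product of stage rates (an assumed multiplicative model)."""
    return recruitment_rr * retention_factor * wave_rr


wave_rr = WAVE_COMPLETES / PANEL_SIZE
# Share of recruitment respondents who joined and were still active, implied by the model
implied_retention = REPORTED_CUMULATIVE_RR / (RECRUITMENT_RR * wave_rr)

print(f"wave response rate: {wave_rr:.1%}")
print(f"implied joining/retention factor: {implied_retention:.2f}")
```

The wave rate works out to about 80.6%, which rounds to the reported 81%, and under this model roughly a third of recruitment-survey respondents would have joined and remained active.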

Shareable quotes from Americans on online harassment