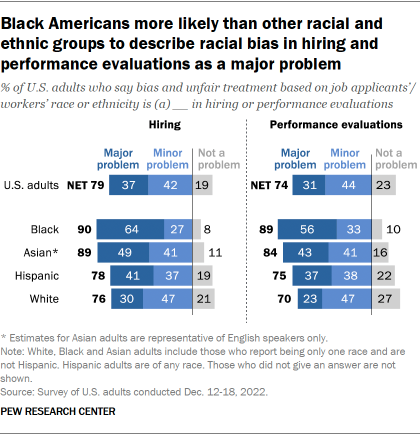

Majorities of White, Black, Hispanic and Asian Americans say racial and ethnic bias in hiring practices and performance evaluations is a problem. But they differ over how big a problem it is, according to a Pew Research Center survey of U.S. adults conducted in December 2022.

Pew Research Center conducted this study to understand Americans’ views of artificial intelligence and its uses in workplace hiring and monitoring. For this analysis, we surveyed 11,004 U.S. adults from Dec. 12 to 18, 2022.

Everyone who took part in the survey is a member of the Center’s American Trends Panel (ATP), an online survey panel that is recruited through national, random sampling of residential addresses. This way, nearly all U.S. adults have a chance of selection. The survey is weighted to be representative of the U.S. adult population by gender, race, ethnicity, partisan affiliation, education and other categories. Read more about the ATP’s methodology. Here are the questions used for this analysis, along with responses, and its methodology.

This survey includes a total sample size of 371 Asian adults. The sample primarily includes English-speaking Asian adults and, therefore, may not be representative of the overall Asian adult population. Despite this limitation, Asian adults’ responses are incorporated into the general population figures throughout this analysis.

This analysis also features open-ended quotes from respondents that have been slightly edited for length and clarity.

Some 64% of Black adults describe bias and unfair treatment based on a job applicant’s race or ethnicity as a major problem, compared with 49% of Asian and 41% of Hispanic adults and an even smaller share of White adults (30%).

A similar pattern appears when Americans are asked about unfair treatment in evaluating workers. Some 56% of Black Americans see racial or ethnic bias as a major problem in worker evaluations, compared with about four-in-ten Asian and Hispanic adults and 23% of White adults.

In recent years, companies have sought to address diversity and equity issues in the workplace, including by updating their recruiting strategies and hiring diversity officers. Some are also turning to artificial intelligence for help. While proponents believe AI can circumvent human biases in the hiring process, critics argue these systems simply reinforce preexisting prejudices.

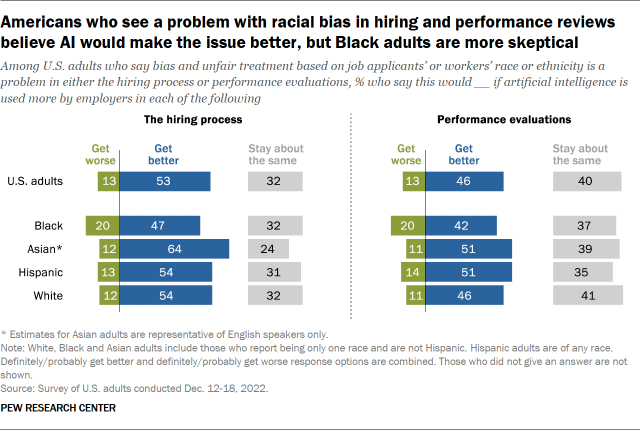

This survey finds that many Americans who see racial and ethnic bias as a problem in hiring believe AI could lead to more equitable practices. About half of those who see racial bias as a problem in hiring (53%) believe that it would get better if employers used AI more in the hiring process, while just 13% say it would get worse. About a third (32%) say this problem would stay about the same with the increased use of AI.

Across all racial and ethnic groups surveyed, pluralities of those who view bias in hiring as a problem say AI would improve rather than worsen this issue. But Black Americans stand out as the most skeptical. Some 20% of Black adults who see racial bias and unfair treatment in hiring as a problem say AI would make things worse, compared with about one-in-ten Hispanic, Asian and White adults.

When it comes to bias in evaluating workers’ performance, the pattern is similar. Among those who see racial and ethnic bias in performance evaluations as a problem, more say AI would improve rather than worsen the situation (46% vs. 13%). Black adults who view this as an issue are again more likely than other groups to say these biases would worsen with increased use of AI.

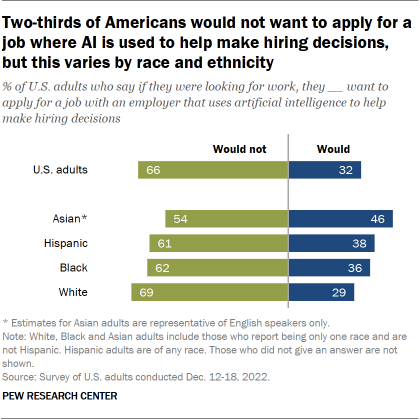

Americans’ willingness to apply for jobs with employers that use AI in hiring

Many employers use AI in some form in their hiring and workplace decision-making, according to the Equal Employment Opportunity Commission. However, Americans are apprehensive about applying for a job with an employer that uses AI in this way, according to the Center’s survey. A majority of Americans (66%) say they would not want to do this, while 32% would be open to it.

There are some racial and ethnic differences in openness to applying for a job with an employer that uses AI in hiring decisions. Asian, Hispanic and Black Americans are more likely than those who are White to say that they would apply to such an employer.

When asked to explain their reasoning in an open-ended question, some respondents on both sides of the issue cited potential bias, including specific mentions of race.

Among those who said they would want to apply for a job with an employer that uses AI in the hiring process, some spoke of their own identity as a reason for their openness. One Black woman in her 60s explained: “I am a person of color. An employer will see that before a careful study of my credentials and probably be biased on hiring or on pay scale. If AI is programmed to identify qualifications and allocate appropriate salary regardless of race or gender, so much workplace discrimination would end.”

And a White woman in her 70s said she thought AI could mitigate bias: “AI would not discriminate based on sex or race. Humans do that all the time.”

But those who would not apply for this type of job balk at the idea that AI would be more equitable. A White woman in her 30s said, “The biases and prejudices (e.g., race, gender, region) of the programmer will be quietly passed on to the AI despite the hiring company expecting a completely neutral program.”

A Hispanic man in his 40s echoed these sentiments: “AI reflects the biases of humanity. AI is not a savior of equality but a mask to embed the racism and sexism that already exists.”
