In order to gain a fuller understanding of what types of researchers are using Mechanical Turk, Pew Research Center conducted a detailed content analysis of HIT groups posted during the week of Dec. 7-11, 2015. The Center captured and analyzed the most recently posted HIT groups three times a day for each of those five days. In all, 2,123 different HIT groups from 294 different requesters were analyzed. (See the Methodology for a detailed explanation of the capture and coding process.)

Because the only public information about requesters is the screen name of the person or group posting a HIT group, categorization of these requesters is challenging. For this project, Pew Research Center placed requesters into categories based on as much contextual information as was available. For some requesters, the categorization was simple because their screen names clearly revealed their identity.

When a requester’s name was less clear, the Center used other clues to make determinations, including Google searches and the keywords offered by the requester in the task description. Many academics can be located through their personal websites, and keywords such as “research” or “psychology” gave further context. (All of these categorizations were based on the assumption that those who post tasks to MTurk are being truthful when identifying themselves. There is no independent way to confirm whether this is true.)

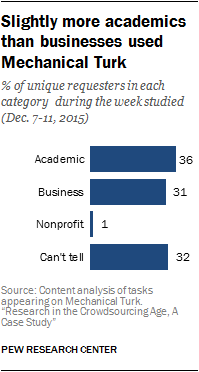

The requesters were categorized into four distinct groups: “academic,” “business,” “nonprofit” and “can’t tell.” Even when using all the contextual information available along with web searches, about a third of the requesters (32%) had generic names that could not be identified. These included names such as “HCTEST,” “Roseanna,” and “Heather.”

Of the requesters who could be identified, slightly more academics than companies used the site

During the week studied, Pew Research Center found that 36% of the unique requesters were academic groups, professors or graduate students. That was slightly more than the 31% that were businesses. Identifiable nonprofits were barely represented at 1%.
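As a rough check on these shares, the short Python sketch below recomputes them from counts stated elsewhere in this section: 294 requesters in total, 107 identified as academic, and the top five businesses plus 86 others. The variable and function names are illustrative only and are not part of the Center's coding process.

```python
# Counts are taken from figures stated in this section; names are illustrative.
TOTAL_REQUESTERS = 294
ACADEMIC_REQUESTERS = 107          # academic groups and individuals
BUSINESS_REQUESTERS = 5 + 86       # top five businesses plus 86 others


def share_pct(count, total):
    """Return count as a whole-number percentage of total."""
    return round(100 * count / total)


academic_share = share_pct(ACADEMIC_REQUESTERS, TOTAL_REQUESTERS)
business_share = share_pct(BUSINESS_REQUESTERS, TOTAL_REQUESTERS)

print(f"academic: {academic_share}%, business: {business_share}%")
```

Under these assumptions, the rounded results match the 36% and 31% reported above.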

While the total numbers of academics and businesses were fairly close, the ways each type of group used the site were very different.

A few companies made up the majority of business on MTurk during the week of Pew Research Center’s study

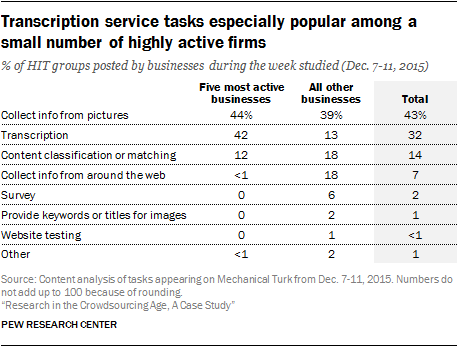

The businesses that use MTurk tend to be comparatively active. Even though they made up a smaller proportion of the requesters during the December period of analysis, they posted far more work. Businesses accounted for about one-third of the requesters posting tasks (31%), but their work accounted for 83% of the HIT groups posted, compared with only 9% for academics.

The distribution of work from the business community was very top-heavy: a small number of companies posted the majority of the work on the site. The top five most active requesters, all of which were businesses, accounted for more than half of all the HIT groups posted for the week (53%).

For these companies, Turkers may have become part of their regular workforce and the use of Mechanical Turk may be an important part of their business model. These companies posted identical tasks on a daily basis.

The most active requester was the CEO of a company that specializes in producing real-time sales data. That firm accounted for 19% of the total HIT groups during the period of our analysis, with an average of 79 new HIT groups posted per day. This company provides data based on the behaviors of its research panel. One major component of the company’s process is to have shoppers take a picture of their sales receipts and then have Turkers transcribe the purchases listed on those receipts in a standardized format.

Virtually all of the HITs posted by this firm during the study were a version of this transcription process. For example, on Dec. 10, the company posted 78 HIT groups, nearly all of which had virtually the same title and description.
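The top requester's 19% share can be sanity-checked against its daily average. The Python sketch below assumes the average of 79 HIT groups per day applies to each of the five days studied; that assumption, and the variable names, are illustrative rather than taken from the Center's methodology.

```python
# Figures come from this report; the five-day averaging window is an assumption.
TOTAL_HIT_GROUPS = 2123   # HIT groups analyzed during the week
STUDY_DAYS = 5            # Dec. 7-11, 2015
AVG_PER_DAY = 79          # top requester's average new HIT groups per day

# Approximate weekly total for the top requester.
estimated_groups = AVG_PER_DAY * STUDY_DAYS

# Share of all analyzed HIT groups, as a percentage.
estimated_share = 100 * estimated_groups / TOTAL_HIT_GROUPS

print(f"about {estimated_groups} HIT groups, {estimated_share:.1f}% of the total")
```

On that assumption, the estimate comes to about 395 HIT groups, which rounds to the 19% share reported above.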

The next two largest requesters were both transcription services for audio or video files. Together they accounted for 22% of all the HIT groups in the December study timeframe.

These requests were often for transcription of lengthy audio or video files. For example, a Dec. 14 request asked for a Turker to transcribe an audio file that was 44.5 minutes long. The assignment was to be completed in less than six hours for a payment of $16.

While most transcription requests asked Turkers to transcribe files themselves, some of these services asked Turkers to “approve” or “edit” written transcripts that had already been produced. In this way, they were using the site to double-check the accuracy of other transcribers.

Beyond those top five businesses, 86 other businesses posted on the site, accounting for 30% of the overall HIT groups that week (the 83% posted by all businesses minus the 53% posted by the top five). These businesses used MTurk for transcriptions along with a wide variety of other types of tasks. One voice talent service, for example, asked Turkers to check whether audio samples submitted by actors met a set of defined criteria. Another business asked Turkers to “grade and correct” tasks completed by others. Specifically, workers were asked to verify URLs for businesses that had been found by other Turkers.

Not all of the businesses that used MTurk did so regularly throughout the week. About half of the businesses (53 of them) posted only one or two HIT groups, suggesting they saw MTurk as a place for help with occasional tasks rather than as part of their regular workforce. But those companies combined to make up only a small portion of the overall activity in this period (3%).

In the period studied, academics used MTurk as a survey tool for nonrepresentative samples; those who did typically had one project to complete

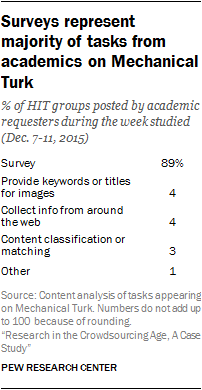

These findings indicate that researchers and academics use the site differently from businesses: In general, members of the academic community use Mechanical Turk as a way to conduct inexpensive surveys or recruit study participants, even if those surveys rely on nonrandom samples.

During this time period, 107 different groups and individuals could be identified as being from the academic community. More than two-thirds of those academic requesters (70%) posted only a single task during the week. On the other hand, 7% posted five times or more. In addition, the vast majority of tasks posted by academics in this period were surveys (89%). Compared with traditional methods of surveys and panel experiments, MTurk is much cheaper and sometimes easier to use.

For academics who used MTurk for something other than a survey, their HIT groups were generally part of other types of experiments. Some researchers asked Turkers to search for a term on Google and report how many ads they saw on the page, while others asked Turkers to conduct visual experiments.