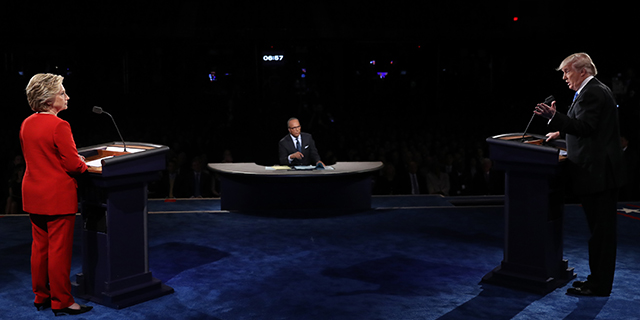

Prize fights and Olympic contests have judges, but debates between candidates for public office in the U.S. are ultimately judged by the voters. In the aftermath of presidential debates, there is intense interest in gauging “who won.” How can we know the answer to that question?

Pollsters answer in two ways: sample surveys of debate viewers, and comparisons of before-and-after polling about the candidates. By these measures, the results of the first two presidential debates were similar – sample surveys of debate viewers generally indicated that Hillary Clinton had won, and national polls tended to show support for Clinton holding steady or improving in the days following the debates. But after both debates, some supporters of Donald Trump – and the candidate himself – pointed to surveys conducted among visitors to news organizations’ websites, many of which found majorities saying Trump prevailed.

How should voters make sense of the flurry of polls that claim to tell us who won? Here is a brief overview of different ways of judging the debates.

Surveys of debate viewers

For those in need of an immediate answer to the question of “who won,” polls are conducted the night of the debate among a sample of those who watched. Typically, a pollster will ask respondents in a survey conducted before the debate whether they intend to watch and whether they would be willing to be interviewed immediately afterward. In one such CNN/ORC poll conducted after the first presidential debate on Sept. 26, Clinton was called the winner over Trump by a margin of 62%-27%.

Such polls have the advantage of capturing reactions quickly, before pundits and journalists can begin to form a consensus about what happened in the debate – a consensus that can itself influence public opinion. But these polls also have weaknesses. The people who agree to be interviewed and then actually complete the interview may not be a representative sample of all debate watchers; for example, one candidate’s supporters may be more likely to say they will watch or to take part in the poll. Pollsters could weight these samples so that respondents’ candidate preferences match those measured in the larger survey from which they were drawn, but they don’t always do so, because the poll is meant to represent debate watchers, not the full electorate.
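The weighting step can be sketched with a small calculation. This is a minimal illustration, assuming simple cell weighting on a single variable (candidate preference) and entirely hypothetical numbers; actual pollsters may weight on several variables using other methods. The helper names `cell_weights` and `share_saying` are illustrative only.

```python
from collections import Counter


def cell_weights(prefs, target_shares):
    """Weight for each preference group = target share / share in the sample."""
    n = len(prefs)
    counts = Counter(prefs)
    return {group: target_shares[group] / (count / n) for group, count in counts.items()}


def share_saying(winner, prefs, verdicts, weights=None):
    """Share of respondents naming `winner`, optionally weighted by preference group."""
    w = [1.0 if weights is None else weights[p] for p in prefs]
    named = sum(wi for wi, v in zip(w, verdicts) if v == winner)
    return named / sum(w)


# Hypothetical post-debate sample: 6 Clinton supporters, 4 Trump supporters.
prefs = ["Clinton"] * 6 + ["Trump"] * 4
# Hypothetical verdicts: all Clinton supporters say Clinton won;
# 3 of the 4 Trump supporters say Trump won, 1 says Clinton.
verdicts = ["Clinton"] * 6 + ["Trump"] * 3 + ["Clinton"]
# Hypothetical parent survey: intended debate watchers split 50/50.
target = {"Clinton": 0.5, "Trump": 0.5}

weights = cell_weights(prefs, target)
print(round(share_saying("Clinton", prefs, verdicts), 3))           # 0.7
print(round(share_saying("Clinton", prefs, verdicts, weights), 3))  # 0.625
```

In this made-up sample, Clinton supporters are overrepresented (60% of respondents versus 50% in the hypothetical parent survey), so down-weighting them moves the share naming Clinton the winner from 70% to 62.5%.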

Quick reactions to events are not always indicative of their ultimate impact. The discussion of the debate among journalists and other observers can shape subsequent public opinion by pointing out factual or logical errors made by the candidates or simply by declaring a winner.

Comparisons of pre- and post-debate polls

If the true impact of a debate reflects the reaction of both the debate viewers and the broader public who hear about the debate after the fact, a comparison of polls before and after the debate may provide a better gauge of its effect. But even here, timing is important because the impact of debates may be temporary and a debate, however important, may not be the only thing affecting voters during a particular period of the campaign.

At this late stage of the presidential campaign, the number of people who are undecided or willing to change from one candidate to another is pretty small – though the relatively large number of people supporting candidates other than Trump or Clinton and the unpopularity of the candidates could mean that the pool of persuadable voters is somewhat larger than normal this year. But even among the decided, debates can energize supporters of one candidate and discourage the other candidate’s followers. This can have an impact on voter turnout, though the effects can decay over time.

Swings in the relative enthusiasm of the candidates’ supporters may also affect their willingness to participate in polls, which can produce shifts in poll results even when the underlying pattern of candidate support has not meaningfully changed. Pew Research Center’s phone survey conducted after the first presidential debate in 2012 found Mitt Romney gaining 6 points and Barack Obama losing 5 points, a pattern also seen in other polling conducted around the same time. However, panel surveys – where the same individuals are interviewed at multiple points in time – suggest that much of the shift in voter sentiment was an illusion, as Democrats were suddenly less willing to be interviewed. In light of this phenomenon, observers should be cautious in interpreting post-debate shifts in voter preference.

By the measure of pre- and post-debate polls, Clinton did well in the first debate. Although few polls show large increases in support for her, nearly all national polls and most state polls show her in a better position now than before the debate. While these results are consistent with the findings of the instant-response polls, they might overstate the debate’s impact in the same way that polls in 2012 may have overstated the impact of that year’s first presidential debate.
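The way a change in willingness to be interviewed can masquerade as a change in support can be shown with a simple calculation. This is a minimal sketch, not a model of the 2012 data: it assumes a hypothetical electorate split evenly between two candidates, labeled A and B, whose preferences do not change at all, plus hypothetical response rates for each candidate’s supporters. The function name `measured_share` is illustrative.

```python
def measured_share(support, response_rate):
    """Each candidate's share of completed interviews, given each group's true
    share of the population and its probability of agreeing to be interviewed."""
    responders = {c: support[c] * response_rate[c] for c in support}
    total = sum(responders.values())
    return {c: responders[c] / total for c in responders}


# Hypothetical electorate whose preferences are identical in both polls.
support = {"A": 0.50, "B": 0.50}

# Before the debate: both sides equally willing to respond.
before = measured_share(support, {"A": 0.10, "B": 0.10})
# After the debate: B's supporters are assumed to be less willing to respond.
after = measured_share(support, {"A": 0.10, "B": 0.08})

print({c: round(s * 100, 1) for c, s in before.items()})  # {'A': 50.0, 'B': 50.0}
print({c: round(s * 100, 1) for c, s in after.items()})   # {'A': 55.6, 'B': 44.4}
```

With equal response rates the poll reads 50%-50%; when B’s supporters become somewhat less willing to respond, the poll shows A ahead by about 56%-44% although no one changed their mind. Re-interviewing the same people, as panel surveys do, separates this kind of artifact from real changes in opinion.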

Website reaction polls

After each debate, Trump has pointed to polls conducted on the websites of several news organizations and opinion outlets to claim that he won. The number of participants in these polls far exceeded the sample sizes of the surveys cited above.

What these polls have in common is that there is no control exercised over who participates. Sample surveys, whether based on random samples or internet respondents recruited to panels or to specific surveys, are conducted either by phone or on private, restricted-access websites, not publicly available sites where all visitors can vote. Apart from other differences, the procedures followed in polls limited to controlled samples prevent a “stuffing the ballot box” phenomenon, where people can be rallied to take part in a particular poll to demonstrate support for a person or a cause. In the age of social media, this is a serious concern, especially with respect to online reviews of products and services.
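The “stuffing the ballot box” problem can be illustrated with a toy tally. This sketch assumes hypothetical respondent IDs and vote counts, and it simplifies a controlled survey to “one recorded answer per invited respondent”; real sample surveys also control who is invited in the first place, which this example does not model. The function names are illustrative.

```python
from collections import Counter


def open_poll(submissions):
    """An open web poll counts every submission it receives."""
    return Counter(choice for _, choice in submissions)


def controlled_poll(submissions):
    """Simplified controlled survey: keep only the first answer from each
    invited respondent ID and ignore any repeats."""
    first = {}
    for rid, choice in submissions:
        first.setdefault(rid, choice)
    return Counter(first.values())


# Hypothetical data: 60 people prefer "X" and 40 prefer "Y", one answer each...
submissions = [(f"x{i}", "X") for i in range(60)] + [(f"y{i}", "Y") for i in range(40)]
# ...then 10 of the "Y" supporters are rallied to submit 5 extra votes each.
submissions += [(f"y{i}", "Y") for i in range(10) for _ in range(5)]

print(open_poll(submissions))        # Counter({'Y': 90, 'X': 60})
print(controlled_poll(submissions))  # Counter({'X': 60, 'Y': 40})
```

In this made-up case, a small, motivated group is enough to turn a 60-40 lead for X into a 90-60 lead for Y in the open tally, while the one-answer-per-respondent count is unaffected.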

There is evidence that such an effort was made to manipulate these website polls after the first presidential debate to create the impression that Trump was the winner. Members of pro-Trump online communities at Reddit and 4chan were encouraged by fellow Trump supporters to cast multiple votes and were provided with URLs for dozens of these polls. Even without active manipulation, these types of opt-in surveys are highly susceptible to bias, something that has been seen since the early years of online surveys. When President Bill Clinton acknowledged his affair with a White House intern in August 1998, a large opt-in online poll of more than 100,000 AOL subscribers found a small majority saying he should resign. Sample surveys conducted at the same time found majorities ranging from 61% to 71% saying that he should not.

All methods of judging public reaction to events like candidate debates have their weaknesses, but well-designed sample surveys can provide useful evidence about how voters judged the candidates. Polls that do not control who can participate or how frequently they can do so, however, have no scientific value.