The longer a survey is, the fewer people are willing to complete it. This is especially true for surveys conducted online, where attention spans can be short. While there is no magic length that an online survey should be, Pew Research Center caps the length of its online American Trends Panel (ATP) surveys at 15 minutes, based on prior research.

But how can we know how long a survey will take to complete before it’s been taken? This is where question counting rules come in. In this post, we’ll explain how Pew Research Center developed and now uses these rules to keep our online surveys from running too long.

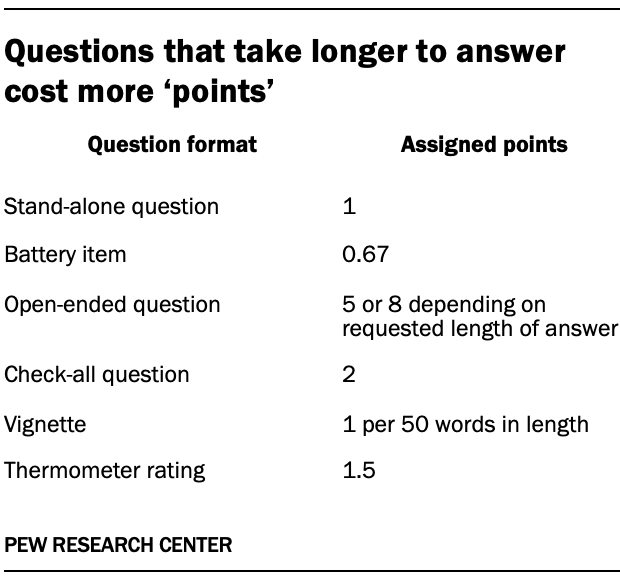

In a nutshell, we classify survey questions based on their format. Each question format has a “point” value reflecting how long it usually takes people to answer. Formats that people tend to answer quickly (e.g., a single item in a larger battery) have a lower point value than questions that require more time (e.g., an open-ended question where respondents are asked to write in their own answers). A 15-minute ATP survey is budgeted at 85 points, so before the survey begins, researchers sum up all of the question point values to make sure the total is 85 or less.

The Center’s point system was developed using historical ATP response times. When administering an online survey, researchers can see how long it takes each respondent to answer the questions on each screen. This information has allowed us to determine, for example, that more challenging open-ended questions can take people minutes to answer, while an ordinary stand-alone question takes only about 10 seconds.
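As a rough consistency check on these figures, the 15-minute cap and the 85-point budget together imply an average amount of time per point. The short Python sketch below works through that arithmetic. The function and constant names are ours, chosen for illustration, and the calculation is a back-of-the-envelope check rather than the method used to derive the point values.

```python
# Figures from the text: a 15-minute ATP survey is budgeted at 85 points.
SURVEY_MINUTES = 15
POINT_BUDGET = 85


def seconds_per_point(minutes: float = SURVEY_MINUTES,
                      points: float = POINT_BUDGET) -> float:
    """Average number of seconds implied by one point of survey budget."""
    return minutes * 60 / points


if __name__ == "__main__":
    # 900 seconds spread over 85 points
    print(round(seconds_per_point(), 1))  # 10.6
```

The result, roughly 10.6 seconds per point, is in line with the observation that an ordinary stand-alone question takes about 10 seconds to answer.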

Question types

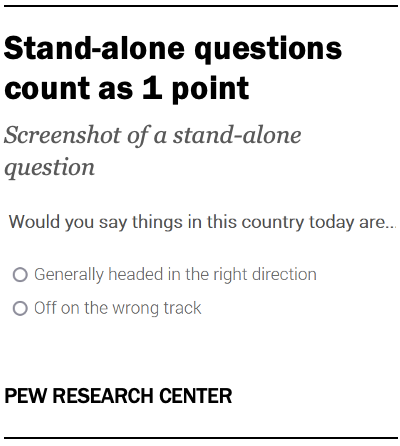

Stand-alone questions

Stand-alone questions are the most common type on the ATP. They are likely what comes to mind when someone imagines a classic survey experience – a straightforward question followed by a set of answer choices. In our counting scheme, these questions count as one point.

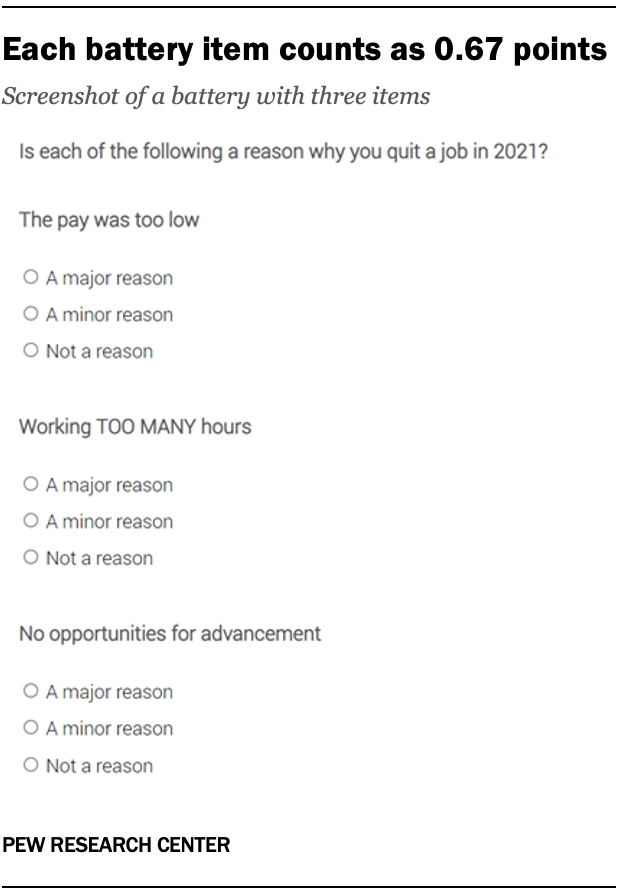

Battery items

Battery items are another popular form of survey question. A battery is a series of questions that share the same stem (e.g., “What is your overall opinion of…”) and differ only in the item being asked about. Each item in the battery is assigned two-thirds of a point (rounded to 0.67 points) in our question counting rules. For example, a battery of five items would count as 5 x 0.67, or 3.35 points in total.

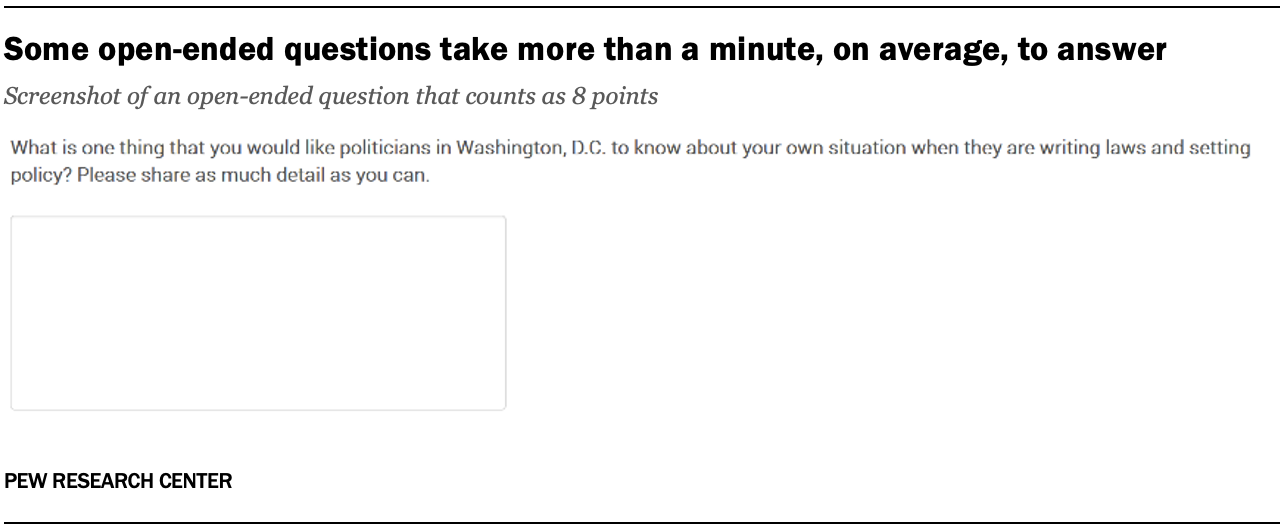

Open-ended questions

Open-ended questions are among the most difficult and time-consuming for respondents to answer. They are also the most likely type for respondents to skip without answering. Open-ended questions require respondents to form answers themselves rather than selecting from the options that are provided. While a standard stand-alone question can be answered in a matter of seconds, open-ended questions often take a minute or more to answer. As a result, these questions have markedly higher points assigned to them in the question counting guidelines.

But not all open-ended questions are created equal. Over the years, we’ve noticed that some take much longer to answer than others. For example, a question like “In a sentence or two, please describe why you think Americans’ level of confidence in the federal government is a very big problem” would take respondents much longer to answer than a question like “Who would be your choice for the Republican nomination for president?” Questions that ask for more detail are assigned eight points, while questions that are more straightforward are assigned five points.

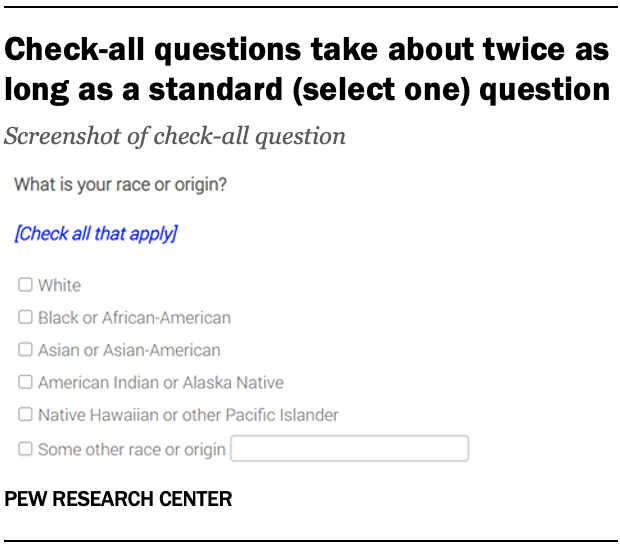

Check-all questions

Check-all questions ask the respondent to select all response options that apply to them. These questions can yield less accurate data than stand-alone questions, so they are rarely used on the ATP. Check-all questions count for two points.

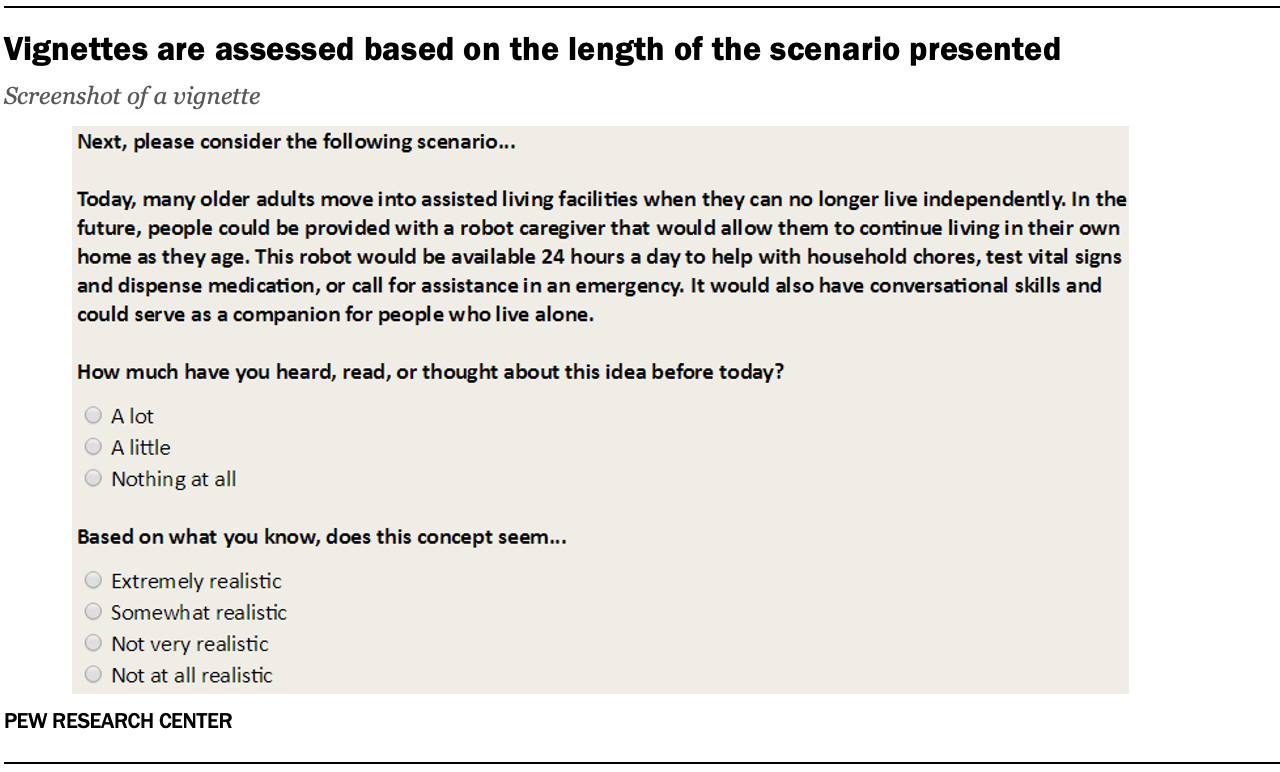

Vignettes

Vignettes present respondents with a hypothetical scenario and then ask questions about that scenario. Typically, at least one detail of the scenario is changed for a random subset of the respondents, allowing researchers to determine how that detail affects people’s answers.

From a survey length standpoint, vignettes are notable for showing respondents a block of text describing a particular scenario. They entail more reading than the other question types. Vignettes are rare on the ATP and are budgeted one point for every 50 words in length.

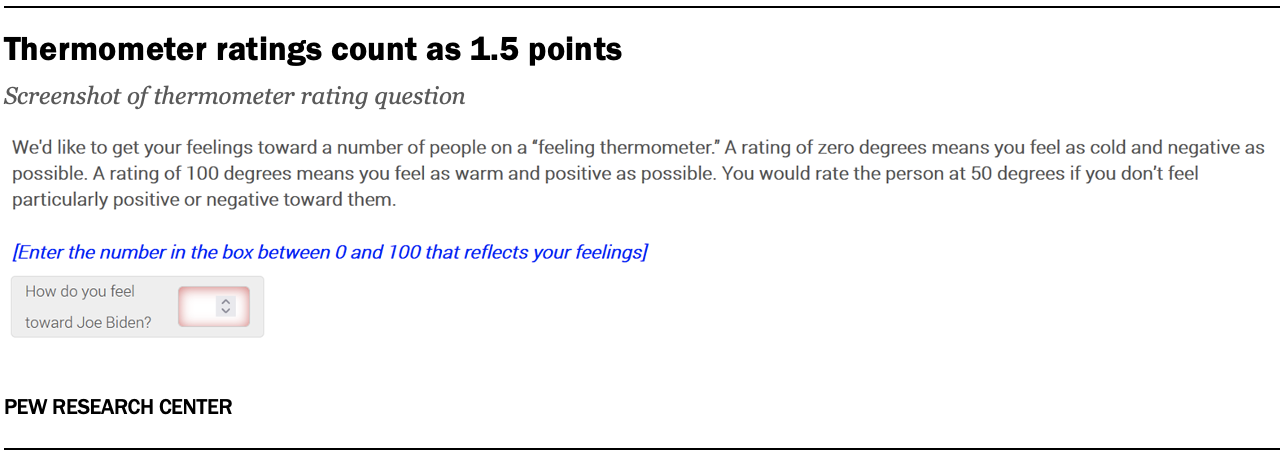
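The one-point-per-50-words rule for vignettes can be sketched in a few lines of Python. This is a minimal illustration under two assumptions that the rule itself does not spell out: words are counted by splitting the text on whitespace, and partial blocks of 50 words earn fractional points rather than being rounded up or down. The function name `vignette_points` is hypothetical.

```python
WORDS_PER_POINT = 50  # from the text: one point for every 50 words


def vignette_points(text: str) -> float:
    """Points for a vignette's scenario text.

    Assumptions (not stated in the counting rules): words are
    whitespace-delimited, and points scale proportionally with length
    instead of being rounded to whole numbers.
    """
    word_count = len(text.split())
    return word_count / WORDS_PER_POINT


if __name__ == "__main__":
    scenario = "word " * 125  # stand-in for a 125-word scenario
    print(vignette_points(scenario))  # 2.5
```

Any questions asked about the scenario would be counted separately, according to their own formats.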

Thermometer ratings

Feeling thermometers ask respondents to rate something on a scale from 0 to 100, in which 0 represents the coldest, most negative view and 100 represents the warmest and most positive.

They are budgeted for 1.5 points because they are more difficult than a question with discrete answer options. It can sometimes be challenging for respondents to map their opinion of someone or something onto a 0-100 scale.
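Putting the rules together, the budget check for a draft questionnaire amounts to a weighted sum. The Python sketch below is one way to express it, using the point values described above and the 85-point budget for a 15-minute survey. The dictionary keys, function names and the example draft are all hypothetical. The sketch also assumes that vignette points scale proportionally with word count, which the rules do not specify.

```python
POINT_BUDGET = 85  # budget for a 15-minute ATP survey, per the text

# Point values per question format, as described in the text.
POINTS = {
    "stand_alone": 1.0,
    "battery_item": 0.67,      # two-thirds of a point, rounded as in the text
    "open_end_detailed": 8.0,  # open-ended questions asking for more detail
    "open_end_simple": 5.0,    # more straightforward open-ended questions
    "check_all": 2.0,
    "thermometer": 1.5,
}
VIGNETTE_WORDS_PER_POINT = 50


def total_points(counts: dict[str, int], vignette_words: int = 0) -> float:
    """Sum the point values for a draft questionnaire.

    `counts` maps a format name in POINTS to how many such questions
    (or battery items) the draft contains. `vignette_words` is the total
    length of any vignette text (assumed to score proportionally).
    """
    total = sum(POINTS[fmt] * n for fmt, n in counts.items())
    total += vignette_words / VIGNETTE_WORDS_PER_POINT
    return round(total, 2)


def within_budget(counts: dict[str, int], vignette_words: int = 0) -> bool:
    """True if the draft fits within the 85-point budget."""
    return total_points(counts, vignette_words) <= POINT_BUDGET


if __name__ == "__main__":
    # Hypothetical draft, for illustration only.
    draft = {
        "stand_alone": 40,
        "battery_item": 15,  # e.g., three batteries of five items each
        "open_end_detailed": 1,
        "open_end_simple": 1,
        "check_all": 2,
        "thermometer": 2,
    }
    print(total_points(draft, vignette_words=100))   # 72.05
    print(within_budget(draft, vignette_words=100))  # True
```

In this hypothetical draft, the total of 72.05 points leaves about 13 points of headroom, enough for, say, one more detailed open-ended question and a handful of stand-alone questions.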

Conclusion

Thanks to these question counting rules, the incentives we offer for survey completion and the goodwill and patience of our panelists, 80% or more of the respondents selected for each survey typically complete the questionnaire. In other words, the survey-level response rate is usually 80% or higher. Among the narrower set of people who log on to the survey and complete at least one item, about 99% typically complete the entire questionnaire. That is, the break-off rate is usually about 1%.